CSLA.NET is a framework that provides standardized business logic functionality to your applications. It includes a rules engine, business object persistence, and more. I first encountered it on a project at my current job.

My initial impression of CSLA was that it was intrusive. It requires you to derive all your editable business objects from a base class, which violates the “composition over inheritance” principle. Then there’s the DataPortal, an object that wants to manage all your data access. I found that many of the business objects (BOs) in our application could only be created via DataPortal_Create() or DataPortal_Fetch(). On the surface, it appears that we have very little control over the BO life cycle.

After feeling the pain of trying to unit test a bunch of existing BusinessBase-inheriting classes, I set out to find a way to use dependency injection with CSLA. Interestingly, DI is mentioned in the CslaFastStart sample, but there are no details on how to utilize it. I didn’t want to resort to service location, so I searched until I found an article on magenic.com called “Abstractions in CSLA” which offers a clean implementation of DI using Autofac.

I started with a slightly modified CslaFastStart, and applied a simplified version of the approach from the Magenic article. I’ll share some of the highlights of this process.

Like the Fast Start example, let’s assume we have a business object called Person that inherits from BusinessBase. (The original code names it PersonEdit, but it’s often considered bad practice to include verbs in your class names.) As you can see, we have a few registered properties along with our DataPortal override methods. These methods instantiate a PersonRepository (PersonDal in the original) to do their work.

[Serializable]

public class Person : BusinessBase<Person>

{

public static readonly PropertyInfo<int> IdProperty = RegisterProperty<int>(c => c.Id);

public int Id

{

get => GetProperty(IdProperty);

private set => LoadProperty(IdProperty, value);

}

public static readonly PropertyInfo<string> FirstNameProperty = RegisterProperty<string>(c => c.FirstName);

[Required]

public string FirstName

{

get => GetProperty(FirstNameProperty);

set => SetProperty(FirstNameProperty, value);

}

public static readonly PropertyInfo<string> LastNameProperty = RegisterProperty<string>(c => c.LastName);

[Required]

public string LastName

{

get => GetProperty(LastNameProperty);

set => SetProperty(LastNameProperty, value);

}

public static readonly PropertyInfo<DateTime?> LastSavedDateProperty = RegisterProperty<DateTime?>(c => c.LastSavedDate);

public DateTime? LastSavedDate

{

get => GetProperty(LastSavedDateProperty);

private set => SetProperty(LastSavedDateProperty, value);

}

protected override void DataPortal_Create()

{

var personRepository = new PersonRepository();

var dto = personRepository.Create();

using (BypassPropertyChecks)

{

Id = dto.Id;

FirstName = dto.FirstName;

LastName = dto.LastName;

}

BusinessRules.CheckRules();

}

protected override void DataPortal_Insert()

{

using (BypassPropertyChecks)

{

LastSavedDate = DateTime.Now;

var dto = new PersonDto

{

FirstName = FirstName,

LastName = LastName,

LastSavedDate = LastSavedDate

};

var personRepository = new PersonRepository();

Id = personRepository.InsertPerson(dto);

}

}

protected override void DataPortal_Update()

{

using (BypassPropertyChecks)

{

LastSavedDate = DateTime.Now;

var dto = new PersonDto

{

Id = Id,

FirstName = FirstName,

LastName = LastName,

LastSavedDate = LastSavedDate

};

var personRepository = new PersonRepository();

personRepository.UpdatePerson(dto);

}

}

private void DataPortal_Delete(int id)

{

using (BypassPropertyChecks)

{

var personRepository = new PersonRepository();

personRepository.DeletePerson(id);

}

}

protected override void DataPortal_DeleteSelf()

{

DataPortal_Delete(Id);

}

}

In our Program.Main(), we create a new person via the DataPortal, assign property values based on user input, do some validation, and save if possible. Nothing unusual here, and when you run the program it all works as expected.

public class Program

{

public static void Main()

{

Console.WriteLine("Creating a new person");

var person = DataPortal.Create<Person>();

Console.Write("Enter first name: ");

person.FirstName = Console.ReadLine();

Console.Write("Enter last name: ");

person.LastName = Console.ReadLine();

if (person.IsSavable)

{

person = person.Save();

Console.WriteLine($"Added person with id {person.Id}. First name = '{person.FirstName}', last name = '{person.LastName}'.");

Console.WriteLine($"Last saved date: {person.LastSavedDate}");

}

else

{

Console.WriteLine("Invalid entry");

foreach (var item in person.BrokenRulesCollection)

{

Console.WriteLine(item.Description);

}

Console.ReadKey();

return;

}

Console.ReadKey();

}

}

The problem comes when we try to write unit tests. Let’s create a test that checks the LastSavedDate property when the Person is saved. We’ll allow one minute of leeway in our assertion, since it takes time for the test to run. Ideally we would fake DateTime.Now as well, but it doesn’t really matter in this contrived example.

[TestFixture]

public class PersonTests

{

[Test]

public void LastSavedDate_GivenPersonIsSaved_ReturnsCurrentTime()

{

// Arrange

var person = new Person

{

FirstName = "Jane",

LastName = "Doe"

};

person = person.Save();

// Act

var lastSavedDate = DateTime.Now;

if (person.LastSavedDate != null)

{

lastSavedDate = person.LastSavedDate.Value;

}

// Assert

// Allow up to one minute for test to run.

Assert.LessOrEqual(DateTime.Now.Subtract(lastSavedDate).TotalMinutes, 1);

}

}

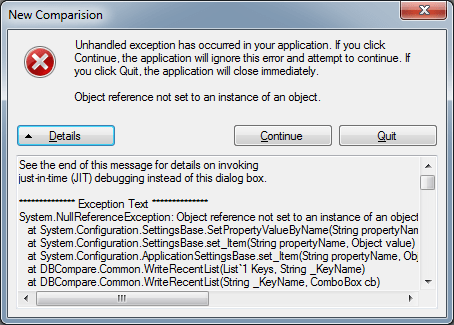

When we run the test, we get an exception relating to the connection string. The connection string is stored in a configuration file in the data access layer, and that file is inaccessible from the test project. The underlying problem is that the Person class creates new PersonRepository instances directly, which introduces tight coupling and makes testing difficult.

Csla.DataPortalException : DataPortal.Update failed (Valid connection string not found.)

----> Csla.Reflection.CallMethodException : Person.DataPortal_Insert method call failed

----> System.Exception : Valid connection string not found.

So what can we do? If we want to inject the repository as a dependency, we need to hand control of our business object creation over to our IoC container, which in this case would be Autofac. This can be done via a custom DataPortalActivator which we’ll call AutofacDataPortalActivator.

public class AutofacDataPortalActivator : IDataPortalActivator

{

private readonly IContainer _container;

public AutofacDataPortalActivator(IContainer container)

{

_container = container ?? throw new ArgumentNullException(nameof(container));

}

public object CreateInstance(Type requestedType)

{

if (requestedType == null)

throw new ArgumentNullException(nameof(requestedType));

return Activator.CreateInstance(requestedType);

}

public void InitializeInstance(object obj)

{

if (obj == null)

throw new ArgumentNullException(nameof(obj));

var scope = _container.BeginLifetimeScope();

((IScopedBusiness)obj).Scope = scope;

scope.InjectProperties(obj);

}

public void FinalizeInstance(object obj)

{

if (obj == null)

throw new ArgumentNullException(nameof(obj));

((IScopedBusiness)obj).Scope.Dispose();

}

public Type ResolveType(Type requestedType)

{

if (requestedType == null)

throw new ArgumentNullException(nameof(requestedType));

return requestedType;

}

}

The most notable part of this class is the InjectProperties call, which uses the registered components to inject properties into a BO instance.
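The activator also casts each object to IScopedBusiness, which isn’t listed in this post. A minimal sketch of that interface, together with a ScopedBusinessBase class that implements it, might look like the following. The exact shape is an assumption inferred from how the activator uses the Scope property, so the version in the Magenic article may well differ. ILifetimeScope comes from Autofac, and BusinessBase and the NotUndoable attribute come from CSLA.

```csharp
using System;
using Autofac;
using Csla;

// Assumed shape: exposes the Autofac lifetime scope that the activator
// assigns in InitializeInstance and disposes in FinalizeInstance.
public interface IScopedBusiness
{
    ILifetimeScope Scope { get; set; }
}

// Assumed base class: a regular CSLA editable object that also
// implements IScopedBusiness so the activator's cast succeeds.
[Serializable]
public abstract class ScopedBusinessBase<T> : BusinessBase<T>, IScopedBusiness
    where T : ScopedBusinessBase<T>
{
    // The scope is a runtime resource, so it is excluded from
    // serialization and from CSLA's n-level undo snapshots.
    [NonSerialized, NotUndoable]
    private ILifetimeScope _scope;

    // Explicit implementation keeps Scope off the public surface of the BO.
    ILifetimeScope IScopedBusiness.Scope
    {
        get => _scope;
        set => _scope = value;
    }
}
```

Implementing the interface explicitly means only code that knows about IScopedBusiness (such as the activator) sees the scope, which keeps the business object’s own API uncluttered.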

In Program.cs, we assign a new data portal activator at the top, and configure our IoC container at the bottom. There you’ll see an IPersonRepository interface that resolves to a new PersonRepository instance.

public class Program

{

public static void Main()

{

ApplicationContext.DataPortalActivator = new AutofacDataPortalActivator(CreateContainer());

Console.WriteLine("Creating a new person");

var person = DataPortal.Create<Person>();

Console.Write("Enter first name: ");

person.FirstName = Console.ReadLine();

Console.Write("Enter last name: ");

person.LastName = Console.ReadLine();

if (person.IsSavable)

{

person = person.Save();

Console.WriteLine($"Added person with id {person.Id}. First name = '{person.FirstName}', last name = '{person.LastName}'.");

Console.WriteLine($"Last saved date: {person.LastSavedDate}");

}

else

{

Console.WriteLine("Invalid entry");

foreach (var item in person.BrokenRulesCollection)

{

Console.WriteLine(item.Description);

}

Console.ReadKey();

return;

}

Console.ReadKey();

}

private static IContainer CreateContainer()

{

var builder = new ContainerBuilder();

builder.RegisterInstance<IPersonRepository>(new PersonRepository());

return builder.Build();

}

}
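One thing to be aware of: RegisterInstance shares a single PersonRepository across the whole application, so the lifetime scope that the activator opens for each object doesn’t change what gets injected. If you wanted one repository per scope instead, Autofac’s type registration with InstancePerLifetimeScope is an option. The sketch below uses empty stand-in types for IPersonRepository and PersonRepository (placeholders, not the real ones) purely to show the difference in behavior.

```csharp
using System;
using Autofac;

// Empty stand-ins for the real repository types, for illustration only.
public interface IPersonRepository { }
public class PersonRepository : IPersonRepository { }

public static class ContainerSketch
{
    public static IContainer CreateContainer()
    {
        var builder = new ContainerBuilder();

        // One PersonRepository per lifetime scope rather than one shared instance.
        builder.RegisterType<PersonRepository>()
               .As<IPersonRepository>()
               .InstancePerLifetimeScope();

        return builder.Build();
    }

    public static void Main()
    {
        using (var container = CreateContainer())
        using (var scopeA = container.BeginLifetimeScope())
        using (var scopeB = container.BeginLifetimeScope())
        {
            var a1 = scopeA.Resolve<IPersonRepository>();
            var a2 = scopeA.Resolve<IPersonRepository>();
            var b1 = scopeB.Resolve<IPersonRepository>();

            // Same instance within a scope, different instances across scopes.
            Console.WriteLine(ReferenceEquals(a1, a2)); // True
            Console.WriteLine(ReferenceEquals(a1, b1)); // False
        }
    }
}
```

For this small console sample the shared instance is perfectly adequate. The scoped registration only starts to matter once the repository holds per-operation state such as an open connection.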

We’ll also need to make some changes to the Person class. We now inherit from ScopedBusinessBase because it gives us the IScopedBusiness interface we need to make our data portal activator work. And the PersonRepository concrete class instances are replaced with an IPersonRepository property.

[Serializable]

public class Person : ScopedBusinessBase<Person>

{

public IPersonRepository PersonRepository { get; set; }

public static readonly PropertyInfo<int> IdProperty = RegisterProperty<int>(c => c.Id);

public int Id

{

get => GetProperty(IdProperty);

private set => LoadProperty(IdProperty, value);

}

public static readonly PropertyInfo<string> FirstNameProperty = RegisterProperty<string>(c => c.FirstName);

[Required]

public string FirstName

{

get => GetProperty(FirstNameProperty);

set => SetProperty(FirstNameProperty, value);

}

public static readonly PropertyInfo<string> LastNameProperty = RegisterProperty<string>(c => c.LastName);

[Required]

public string LastName

{

get => GetProperty(LastNameProperty);

set => SetProperty(LastNameProperty, value);

}

public static readonly PropertyInfo<DateTime?> LastSavedDateProperty = RegisterProperty<DateTime?>(c => c.LastSavedDate);

public DateTime? LastSavedDate

{

get => GetProperty(LastSavedDateProperty);

private set => SetProperty(LastSavedDateProperty, value);

}

protected override void DataPortal_Create()

{

var dto = PersonRepository.Create();

using (BypassPropertyChecks)

{

Id = dto.Id;

FirstName = dto.FirstName;

LastName = dto.LastName;

}

BusinessRules.CheckRules();

}

...

protected override void DataPortal_DeleteSelf()

{

DataPortal_Delete(Id);

}

}

And what about our test? Since the Person repository is now exposed as a public property of the Person, we can assign a mock with a minimal implementation. Only one additional line of code is needed.

[TestFixture]

public class PersonTests

{

[Test]

public void LastSavedDate_GivenPersonIsSaved_ReturnsCurrentTime()

{

// Arrange

var person = new Person

{

PersonRepository = new MockPersonRepository(),

FirstName = "Jane",

LastName = "Doe"

};

person = person.Save();

// Act

var lastSavedDate = DateTime.Now;

if (person.LastSavedDate != null)

{

lastSavedDate = person.LastSavedDate.Value;

}

// Assert

// Allow up to one minute for test to run.

Assert.LessOrEqual(DateTime.Now.Subtract(lastSavedDate).TotalMinutes, 1);

}

}
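The test refers to a MockPersonRepository that isn’t listed in this post. A hand-rolled version could be as small as the sketch below. The IPersonRepository members and the PersonDto properties are inferred from how Person calls them in its DataPortal methods. The return values, the LastInserted helper, and the [Serializable] attribute are assumptions added for illustration.

```csharp
using System;

// DTO shape inferred from the properties that Person reads and writes.
public class PersonDto
{
    public int Id { get; set; }
    public string FirstName { get; set; }
    public string LastName { get; set; }
    public DateTime? LastSavedDate { get; set; }
}

// Interface inferred from the repository calls in the DataPortal methods.
public interface IPersonRepository
{
    PersonDto Create();
    int InsertPerson(PersonDto dto);
    void UpdatePerson(PersonDto dto);
    void DeletePerson(int id);
}

// Minimal in-memory fake: no connection string and no database.
// Marked [Serializable] as a precaution (assumption) in case the
// data portal serializes the object graph that references it.
[Serializable]
public class MockPersonRepository : IPersonRepository
{
    // Hypothetical helper so a test can inspect what was "saved".
    public PersonDto LastInserted { get; private set; }

    // Returns an empty DTO with a placeholder id.
    public PersonDto Create() => new PersonDto { Id = -1 };

    public int InsertPerson(PersonDto dto)
    {
        LastInserted = dto;
        return 1; // arbitrary fake id
    }

    // Update and delete are no-ops in this fake.
    public void UpdatePerson(PersonDto dto) { }

    public void DeletePerson(int id) { }
}
```

A mocking library would work just as well here. A hand-written fake simply keeps the example free of extra dependencies.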

Throughout this experiment I’ve tried to find the simplest solution that works. That means the code may not be fully robust or production-ready. But it does illustrate that using DI with CSLA.NET is possible without too much trouble after the initial setup. I’m not sure I would use CSLA for greenfield projects, but at least the latest versions are adequately configurable.